A clean database between each test run is a must for most integration tests. There are a couple ways to achieve this,

Depending on the number of tests, tables or migrations you have, dropping and re-creating the DB might not be the ideal solution. Re-creation of the DB can be optimized by using TEMPLATE databases but it is still relatively slow.

In Carbon Health, our monolithic API contains over 400 tables and thousands of migrations. If we were to use template databases to re-create our DB in every test, we spend around 400 milliseconds every time we drop & create the DB. This is not acceptable considering we have over 4000 integration tests. We would roughly spend around 1600 seconds = 26 minutes just for the DB setup for our tests.

Previously, our solution involved manually cleaning all tables. We are using scalatest library to run our tests. We extend BeforeAndAfterEach trait and use additional utilities to cleanup all tables before and after the tests. The function we use to cleanup all tables was hand-crafted, calling over 400+ SQL queries in form of DELETE from "table". This call was taking around 150–200ms. This is significantly better than re-creating the DB for each test. However, this approach still required manual maintenance of the table list in the ‘delete all’ function used between tests. Also, we always run a lot more queries than we need, which creates significant overhead.

First, we tried using TRUNCATE over DELETE. Unfortunately, it slowed down the query even more. It seems that truncate is faster only when tables contain a considerable amount of data. So we decided to build some mechanism that would only clean the tables that are written to.

Later, we attempted to inject code into the existing repositories to identify which table rows were being modified. The solutions we were able to apply required thousands of file to change, so we abandoned that idea.

After different attempts to solve test slowness, we ended up creating a PostgreSQL extension using PL/pgSQL to track all the tables we are writing to. We have created a function to capture the names of the tables that are modified during test execution.

Later, we needed to add triggers to the existing tables so they would register themselves to the new table called test_access whenever something is added/deleted/updated.

Now, we should also setup a function that will clear the tables that had some row activity.

Now we are ready to setup our access triggers. Right after we create our tables and run migrations for the first time, we execute the following and now we are ready to use triggers.

{% code-block language="pgsql" %}

SELECT setup_access_triggers();

{% end-code-block %}

To use this new fast trigger, all you have to do is call the following before and after each test to leave the database in a clean state:

{% code-block language="pgsql" %}

SELECT delete_from_accessed_tables();

{% end-code-block %}

Later we added a trait that our tests with DB access should extend:

{% code-block language="scala" %}

def clearAccessedTables(): Unit = {

setupTestTriggers()

finishOperation(sql"""SELECT delete_from_accessed_tables()""".as[Int])

}

trait CleanDBBetweenTests extends BeforeAndAfterEach with BeforeAndAfterAll { this: Suite =>

override def beforeAll(): Unit = {

clearAccessedTables()

}

override def beforeEach(): Unit = {

clearAccessedTables()

}

override def afterAll(): Unit = {

clearAccessedTables()

}

}

{% end-code-block %}

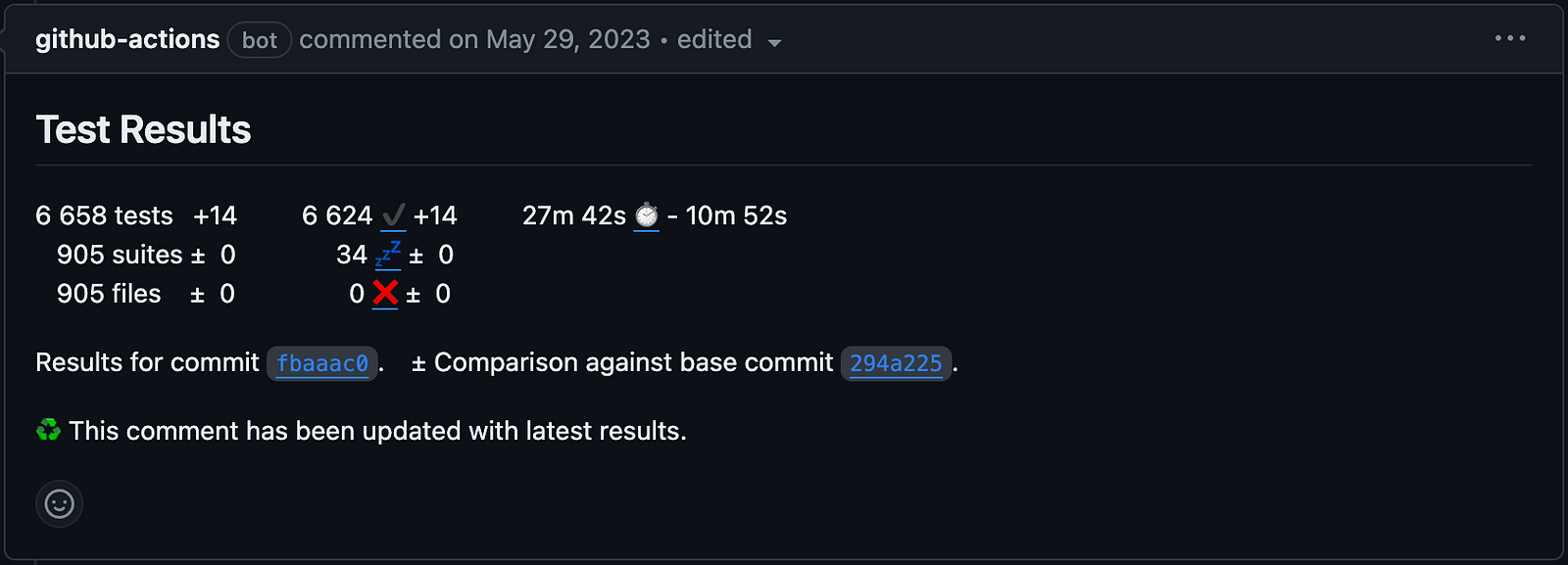

We are very happy with our current approach with database cleaning. It works really well with our current big Monolithic project with over 8000 tests. We had a significant improvement in our test runtimes. It cut down our CI runtime by around 30%.

Test runtime improvement when this change was first applied.

Our repository keeps growing. We are also working on increasing the stability of our app between deployments by writing more tests. Without this change, our current CI runtime would be around 65 minutes as of now.

Even though we have lots of effort towards splitting our monolith, realistically it is not going to happen any time soon and we would like to add more and more tests to ensure our application is stable. Speeding up our CI times didn’t only decrease our bills but it also motivated people towards writing more code and tests as the PR feedback cycle was much quicker.

Moreover, this change helped us resolve some of the flaky behavior while writing tests. The main reason was that we sometimes forgot deleting a certain table, thus execution order of tests affected the outcome. With this change, we don’t have to worry about the cleaning process or maintaining the cleaner code.